No, no. It is not a technical topic. I'll tell you one thing: I'm not a technical guy. I don't have any coding background, and I don't even understand code in the first place.

This topic, "Technical SEO", is similar to On-page SEO, with slight differences. Let me go through it in detail in this article.

In simple words, it is a way to ensure your blog meets the technical requirements of modern search engines, which helps your blog rank higher in organic search results.

Making a blog or website faster, easier to crawl, and understandable for search engines are the pillars of technical SEO.

It is the opposite of off-page SEO, which is about generating exposure for a website through other channels.

These are some of the important elements that need to be taken care of: crawling, indexing, rendering, sitemaps, security, and many more.

- Crawling

- Indexing

- Sitemap

- Rendering

- Security

- Speed

- Mobile Friendly

- Duplicate Content

- Search Console

- Other stuff

Let's try to understand what these are and how they help in ranking.

1. Crawling

We often see how a spider crawls across its web, right? In a similar way, search engines like Google and Bing crawl all the content on the web.

Generally, a crawler first finds new web pages through hyperlinks. When it retrieves a page, it saves all the URLs that page contains.

The crawler then opens each of the saved URLs one by one to repeat the process: It analyses and saves further URLs.

This way, search engines use bots to find linked pages on the web.
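If you are curious what that loop looks like, here is a minimal sketch in Python. It is only an illustration of the idea, not how Googlebot is actually built. The three-page `FAKE_SITE` and the `crawl` and `LinkExtractor` names are made up for this example, and the pages come from a dictionary instead of the real web.

```python
from collections import deque
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch):
    """Visit pages breadth-first; `fetch` returns HTML for a URL, or None."""
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # the page could not be retrieved
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for link in parser.links:
            if link not in seen:  # save each newly found URL only once
                seen.add(link)
                queue.append(link)
    return visited


# Hypothetical three-page site, used instead of real network requests.
FAKE_SITE = {
    "/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "/about": '<a href="/">Home</a>',
    "/blog": '<a href="/about">About</a> <a href="/missing">Old post</a>',
}

print(crawl("/", FAKE_SITE.get))
```

The crawler starts at the home page, saves the links it finds, and opens them one by one. The link to `/missing` is discovered but never listed as visited, because there is no page behind it.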

Definition:

"A crawler is a piece of software that searches the internet and analyzes its contents."

Crawlers are mainly used by search engines like Google and Bing to index websites.

Googlebot, Google's crawler, regularly revisits pages across the internet, whether they are old or new.

The reason is that, by doing so, it can find any new content or links that have been added to them.

An example of a web crawler is Googlebot. What crawlers may visit on your site is controlled by a file called "robots.txt". You may find this in your blog or website settings.

You can use the Robots Exclusion Standard to tell crawlers which pages of your website they may crawl and which they may not.

These instructions are placed in the robots.txt file. Whether a page should be indexed can also be communicated via meta tags in the HTML header. Note, however, that crawlers do not always follow these instructions.
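To see how such rules are read, here is a small sketch that uses `urllib.robotparser` from Python's standard library. The rules, the `ExampleBot` name, and the example.com URLs are assumptions for illustration only. Your own robots.txt will look different.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt: block the /private/ folder, allow the rest.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask whether a crawler called "ExampleBot" may fetch each URL.
print(parser.can_fetch("ExampleBot", "https://example.com/blog/post"))
print(parser.can_fetch("ExampleBot", "https://example.com/private/notes"))
```

The first check is allowed and the second is blocked. Keep in mind this only shows what a well-behaved crawler would do; as noted above, not every crawler follows the rules.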

If you want to check all your indexed pages, go to Google and type "site:yourdomain.com". It will show the indexed pages of your site.

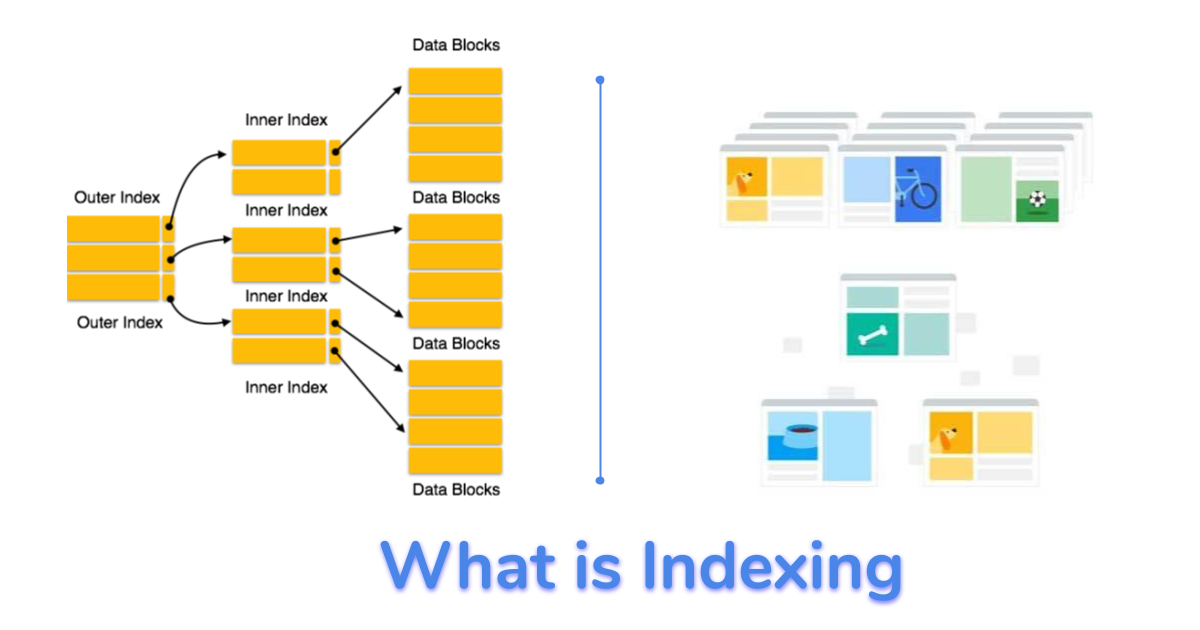

2. Indexing

Indexing is the process of storing and organizing the content that was found during the crawling process.

Search engines process and store information they find in an index, a huge database of all the content they’ve discovered and deemed good enough to serve up to searchers.

If you think crawling is enough for your site to be ranked properly on Google, then you are wrong.

After your site has been crawled, the next step is to make sure it can be indexed. The index is where your discovered pages are stored.

After a crawler finds a page, the search engine renders it just like a browser would.

In the process of doing so, the search engine analyzes that page's contents. All of that information is stored in its index.
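A very simplified picture of an index is a lookup table from words to the pages that contain them. The sketch below shows that idea in Python. It is a toy model, far simpler than any real search engine, and the two pages and the `build_index` and `search` names are made up for this example.

```python
import re
from collections import defaultdict


def build_index(pages):
    """Map each word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            index[word].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every word of the query, sorted."""
    words = re.findall(r"[a-z0-9]+", query.lower())
    if not words:
        return []
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return sorted(results)


# Hypothetical pages that a crawler has already retrieved.
PAGES = {
    "/seo-basics": "Technical SEO makes a blog easier to crawl",
    "/speed": "A faster blog keeps readers happy",
}

index = build_index(PAGES)
print(search(index, "blog"))
print(search(index, "faster blog"))
```

Because the words are stored up front, answering a search is just a quick lookup instead of re-reading every page.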

Google and other search engine providers are constantly working on improving their indexing algorithms.

Google, for example, updates important features of its index at irregular intervals.

By introducing the Caffeine index, Google made a fundamental change to how content is indexed.

This update ensures that new content can be indexed and presented to users much faster.

Web content that is updated regularly, such as podcasts or videos, can be found especially quickly.

3. Sitemap

The sitemap is one of the most important parts of technical SEO, and it is neglected by many bloggers. It helps Google understand the structure that your site follows.

It also helps readers navigate quickly and easily.

A sitemap, or XML sitemap, is a list of all the pages of your site. It serves as a roadmap of your site for search engines.

With it, you’ll make sure search engines won’t miss any important content on your site.

The XML sitemap is often categorized into posts, pages, tags, or other custom post types, and includes the number of images and the last modified date for every page.
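Most blogging platforms and plugins generate this file for you, so you rarely write it by hand. Still, to show what is inside, here is a minimal sketch that builds a tiny sitemap with Python's standard library. The URLs and dates are placeholders, and `build_sitemap` is a name made up for this example.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Placeholder pages and dates, for illustration only.
PAGES = [
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/technical-seo/", "2024-02-01"),
]

print(build_sitemap(PAGES))
```

Each page becomes one `<url>` entry with its address in `<loc>` and its last modified date in `<lastmod>`.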

This map will vary between you and me, because your site may be different from mine. Either way, it helps both users and the search engine.

If you want to see your blog's structure visually online, click here. This will show what is linked to what and which pages are facing errors.

Here is the link where you can build an XML sitemap for free:

Link

4. Redirect

Often a few pages or blog posts need to be removed or moved to another place. But search engines don't know about that right away.

Google takes some time to catch up. Meanwhile, your blog or site will show a "404" or "410" error for the old address.

Here is an example of a "404" error page. This might happen in two cases. One is that you deleted the article. The other is that you moved the article to another place.

A redirect covers the second case: instead of showing an error, it sends the user to the new page.
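The difference between a redirect and a "404" can be sketched in a few lines of Python. This is only a model of the idea; on a real blog you would set redirects up in your platform or hosting settings. The paths, the `REDIRECTS` table, and the `resolve` function are all made up for this example.

```python
# Hypothetical redirect table: old address -> new address.
REDIRECTS = {
    "/old-post": "/new-post",
    "/new-post": "/final-post",
}

# Hypothetical set of pages that currently exist.
LIVE_PAGES = {"/", "/final-post"}


def resolve(path, max_hops=5):
    """Follow redirects and return (final status code, chain of paths)."""
    chain = [path]
    while path in REDIRECTS:
        if len(chain) > max_hops:
            # Guard against redirect loops such as A -> B -> A.
            raise ValueError("too many redirects: " + " -> ".join(chain))
        path = REDIRECTS[path]
        chain.append(path)
    status = 200 if path in LIVE_PAGES else 404
    return status, chain


print(resolve("/old-post"))
print(resolve("/deleted-post"))
```

The moved post ends up on a working page after two hops, while the deleted post ends in a 404. Long chains like the one above work, but pointing the old address straight at the final page is tidier.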

5. Security

Have you ever come across the term "HTTPS"?

It is the secure protocol for your site. To use it, you need to activate an SSL certificate from your domain or hosting account.

What is HTTPS?

Hypertext Transfer Protocol Secure is an extension of the Hypertext Transfer Protocol. It is used for secure communication over a computer network and is widely used on the Internet.

In HTTPS, the communication protocol is encrypted using Transport Layer Security or, formerly, Secure Sockets Layer.

HTTP vs HTTPS

The protocol is the “http” or “https” preceding your domain name. Google recommends that all websites have a secure protocol (the “s” in “https” stands for “secure”).

To ensure that your URLs use the https:// protocol instead of http://, you must obtain an SSL (Secure Sockets Layer) certificate. SSL certificates are used to encrypt data.

They ensure that any data passed between the web server and browser of the searcher remains private. As of July 2018, Google Chrome displays “not secure” for all HTTP sites, which could cause these sites to appear untrustworthy to visitors and result in them leaving the site.
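Once the certificate is active, old links that still start with http:// should be updated. Here is a small sketch that rewrites such links with Python's `urllib.parse`. The `to_https` name and the example URLs are assumptions for illustration, and the rewrite only makes sense if your server really answers on HTTPS.

```python
from urllib.parse import urlsplit, urlunsplit


def to_https(url):
    """Return the same URL with http:// upgraded to https://."""
    parts = urlsplit(url)
    if parts.scheme == "http":
        parts = parts._replace(scheme="https")
    return urlunsplit(parts)


# Placeholder links, for illustration only.
links = [
    "http://www.example.com/technical-seo?ref=home",
    "https://www.example.com/about",
]

for link in links:
    print(link, "->", to_https(link))
```

Links that are already secure pass through unchanged, so it is safe to run the function over every link on a page.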

6. Speed

Load time on both mobile and laptop may also affect your ranking. Make sure your site loads in less than 3.5 seconds, or 5 seconds at most.

If it takes longer than that, visitors may switch to other sites, which is not good when your blog or site is new.

Paste your site URL here to see the load time and other site details.

This will affect your ranking on both Google and other search engines.
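If you want a rough feel for the numbers, the sketch below times a page download and compares it with the 3.5 second target and 5 second maximum mentioned above. It is a simplification: it measures only how long a download function takes, not the full load in a browser. The `fake_fetch` function is a made-up stand-in so the example runs without a network connection.

```python
import time


def measure_load_time(fetch, url):
    """Return how many seconds the `fetch` callable takes for `url`."""
    start = time.perf_counter()
    fetch(url)
    return time.perf_counter() - start


def rate_load_time(seconds, target=3.5, limit=5.0):
    """Classify a load time against the target and the maximum."""
    if seconds < target:
        return "good"
    if seconds <= limit:
        return "acceptable"
    return "too slow"


def fake_fetch(url):
    """Hypothetical stand-in for a real page download."""
    time.sleep(0.05)


elapsed = measure_load_time(fake_fetch, "https://www.example.com/")
print(f"{elapsed:.2f}s -> {rate_load_time(elapsed)}")
```

To try it on a real page, you could pass in a function that actually downloads the URL in place of `fake_fetch`.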

7. Mobile Friendly

According to Google, more than 60% of searches come from mobile, yet most blogs are not optimized for mobile viewing.

Nowadays Google checks for mobile-friendliness. If the site looks good on a laptop and on an iPad but not on a phone, the blog won't rank high.

Your blog might lose valuable points from an organic ranking perspective.

Always check for mobile-friendly themes. If you assume your theme is okay without checking, you are making a mistake. Do a quality check yourself.

If you are using Chrome, right-click on the page and, at the bottom of the menu, there will be an Inspect option. Click on that.

You'll get this view where you can test your website.
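One quick thing you can also check is whether the page declares a responsive viewport, which mobile-friendly themes normally do. The sketch below looks for that tag with Python's built-in HTML parser. It is only one signal, not a full mobile-friendliness test, and the class and function names and the two sample pages are made up for this example.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Remembers the content of <meta name="viewport">, if present."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name == "viewport":
            self.viewport = attributes.get("content") or ""


def has_responsive_viewport(html):
    """True if the page sets its viewport width to the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    return finder.viewport is not None and "width=device-width" in finder.viewport


# Two tiny sample pages, for illustration only.
MOBILE_READY = (
    '<head><meta name="viewport" '
    'content="width=device-width, initial-scale=1"></head>'
)
DESKTOP_ONLY = "<head><title>My blog</title></head>"

print(has_responsive_viewport(MOBILE_READY))
print(has_responsive_viewport(DESKTOP_ONLY))
```

A page that fails this check will usually show up as a shrunken desktop layout on a phone, which is exactly what the Inspect view lets you see.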

8. Duplicate Content

I often have to create duplicate content. There is no way you can create unique content all the time. For example, take this article: some of the points, like "Speed" and "Security", also appear in the On-page SEO article.

However, there is no penalty from Google's side for duplicate content within your own site. But if you copy from other sites, it is worthless.

Your blog may contain duplicate content; it is not a big problem. However, Google needs to know what to show for a particular request.

If there are two articles with the same name, Google will get confused and will show the most viewed article on top.

You may create the same content by simply changing the file name or headings, but Google can understand what it is about. If it gets confused, it will be hard to rank your article.

If you need to reuse any content, add it in the middle of an article. Don't create an exact copy of an article.
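To get a feel for how two texts can be compared, here is a small sketch that scores their overlap by counting shared three-word phrases (a technique often called shingling with Jaccard similarity). This is a common textbook approach that I am using for illustration; it is not a description of how Google detects duplicates. The function names and sample sentences are made up.

```python
def shingles(text, size=3):
    """Return the set of consecutive word groups of length `size`."""
    words = text.lower().split()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(text_a, text_b, size=3):
    """Share of word groups the two texts have in common (0.0 to 1.0)."""
    set_a, set_b = shingles(text_a, size), shingles(text_b, size)
    if not set_a or not set_b:
        return 0.0
    return len(set_a & set_b) / len(set_a | set_b)


# Sample sentences, for illustration only.
first = "make sure your site loads in less than three seconds"
second = "make sure your site loads quickly on mobile phones"

print(similarity(first, first))
print(similarity(first, second))
```

An exact copy scores 1.0, while the two sample sentences share only their opening phrases and score much lower. A score close to 1.0 between two of your own articles is a hint that one of them is an exact copy.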

9. Search Console

Google Search Console is the best way to optimize your site. It helps you in many ways. For example, you can manage your domains and subdomains.

Just like this, Bing has its own console, called Bing Webmaster Tools.

You can better understand your blog with these free tools. There are paid tools available too, but these are the best.

10. Other stuff

Always have one domain name. For example, mine is "www.bloggingformulae.com". I use it in all places. I don't have any other domains, like .in or .org. Having several will lead to confusion.

I have had first-hand experience of this. To avoid all this confusion, keep one domain for everything.
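If you already own several variants of a domain, the usual fix is to send all of them to one main address. The sketch below shows that mapping in Python. It is only an illustration: the `CANONICAL_HOST`, the alias list, and the `canonical_url` function are made up, and on a real site this would be done with redirects in your hosting settings.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical main domain and the variants that should point to it.
CANONICAL_HOST = "www.example.com"
ALIASES = {"example.com", "example.in", "www.example.in", "example.org"}


def canonical_url(url):
    """Rewrite a URL on any alias domain to the one main domain."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if host in ALIASES:
        parts = parts._replace(netloc=CANONICAL_HOST)
    return urlunsplit(parts)


print(canonical_url("https://example.in/technical-seo?ref=mail"))
print(canonical_url("https://www.example.com/about"))
```

Every variant ends up at the same address, so readers and search engines only ever see one version of each page.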

You also need to structure your blog in such a way that the user does not have to go on a treasure hunt. All the topics need to be at their fingertips. Use dropdown menus and other options for navigation.

Just like users, Google and Bing need to understand the blog structure as well as the content structure.

Conclusion

See, there is no coding involved in this topic. "Technical" is just a name. This process, along with "On-Page SEO", is very important for ranking high in organic search results.

All these points are similar across all platforms, and if you want to optimize your blog, you need to take care of all the points that we just discussed.

Now that we're good with Technical SEO and On-page SEO, let's move on to another interesting topic: link building and content promotion. These two are very much necessary for beginner bloggers to get traffic.

Link building helps your blog or site to rank faster.

Let's move on to another important topic, which is "Link Building".

Follow along with me.