Search engines like Google, Bing, and many others are the librarians of the internet.

In general, what do the librarians we see in our schools or colleges do?

They are responsible for "selecting", "sorting", "preserving", and "presenting" the material we ask for.

In a word, there is not much difference between the librarians we see in our schools and "librarians" like Google and Bing that we use every day on the internet.

The only difference is that Google and Bing do the same work on a much larger scale. I mean a significantly larger scale.

They are also called the "answering machines" of the internet.

For example, if you write a blog post and I want to read it, I have to search for it on Google.

For Google to show your content in its search results, that content first needs to be visible to search engines. In other words, Google needs to see it first.

Remember this: Google finding your article is the most important piece.

If your site can't be found, there's no way it will ever show up in Google Search results.

Let's look at this in detail.

How Do Search Engines Work?

Every search engine, whether it is Google, Bing, or Yahoo, depends on three components.

These three components are the same for all of them. What are they?

1. Crawl: Search the internet for content, looking over the URLs and code of each site they find.

2. Index: Store and organize the content found during the crawling process.

3. Rank: Order the content from most relevant to least relevant for the search you made.

Let's look at each of these in detail.

1. What is Crawling?

We often see a spider crawl across its web, right? In a similar way, search engines like Google and Bing crawl all the content on the web.

Generally, a crawler first finds new web pages through hyperlinks. When it retrieves a page, it saves all the URLs that page contains.

The crawler then opens each of the saved URLs one by one and repeats the process: it analyzes each page and saves any further URLs it finds.

This way, search engines use bots to find linked pages on the web.
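The crawl loop described above can be sketched in a few lines of Python. This is a minimal sketch, assuming a tiny hypothetical in-memory "web": the `WEB` dictionary and the example.com URLs are made up for illustration. A real crawler such as Googlebot downloads each page over HTTP, parses its HTML for links, respects robots.txt, and works at a vastly larger scale.

```python
from collections import deque

# Hypothetical, in-memory "web": each URL maps to the links found on that page.
# A real crawler would download each page over HTTP and parse its HTML instead.
WEB = {
    "https://example.com/": ["https://example.com/blog", "https://example.com/about"],
    "https://example.com/blog": ["https://example.com/blog/post-1", "https://example.com/"],
    "https://example.com/about": [],
    "https://example.com/blog/post-1": ["https://example.com/about"],
}


def crawl(seed_url: str) -> list[str]:
    """Breadth-first crawl: open a page, save its links, then open each saved link."""
    frontier = deque([seed_url])  # URLs saved but not yet opened
    seen = {seed_url}             # every URL already queued, so none is visited twice
    visited = []                  # order in which pages were crawled
    while frontier:
        url = frontier.popleft()
        visited.append(url)
        for link in WEB.get(url, []):  # "retrieve" the page and read its links
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited


print(crawl("https://example.com/"))
```

The `seen` set is what keeps the crawler from looping forever when pages link back to each other, as `/blog` does to `/` here.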

Definition:

"A crawler is a piece of software that searches the internet and analyzes its contents."

Crawlers are mainly used by search engines like Google and Bing to index websites.

Note: Crawlers are also used for data collection.

You might then ask: how do they crawl so many pages on the internet?

Crawlers are bots. Bots are programs that automatically perform defined, repetitive tasks.

This is not new to the internet.

In 1993, the first web crawler, called the World Wide Web Wanderer, was used to measure the growth of the internet.

Nowadays, such bots are the backbone of web search. They are the main reason search engine optimization (SEO) is such a popular part of online marketing.

Crawlers can find almost any type of content on the internet; to a crawler, everything is just data made of 0s and 1s.

Content may vary: it could be a blog post, a plain web page, an image, a video, a PDF, etc. But regardless of the format, content is discovered through links.

Googlebot regularly crawls pages on the internet, both old and new.

The reason is that, by doing so, it can find any new content or new links attached to those pages.

A file closely tied to crawlers is "robots.txt". It is not a crawler itself; it is a set of instructions for crawlers. You may find it in your blog or website settings.

You can use the Robots Exclusion Standard to tell crawlers which pages of your website they may crawl and which they may not.

These instructions are placed in a file called robots.txt, or they can be communicated via meta tags in the HTML head. Note, however, that crawlers do not always follow these instructions.
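To see how a well-behaved crawler reads robots.txt, here is a small sketch using Python's standard `urllib.robotparser` module. The robots.txt rules, the example.com URLs, and the crawler name `MyCrawler` are hypothetical. Keep in mind that robots.txt controls crawling; keeping an already discovered page out of the index is usually done with a `noindex` robots meta tag instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: every crawler may visit the site except /admin/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse rules from text instead of downloading them

# A polite crawler checks each URL before fetching it.
for url in ["https://example.com/blog/post-1", "https://example.com/admin/settings"]:
    print(url, "->", parser.can_fetch("MyCrawler", url))
```

On a live site you would call `set_url("https://yourdomain.com/robots.txt")` and `read()` instead of `parse()`, and nothing forces a badly behaved bot to run this check at all.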

If you want to check which of your pages are indexed, go to Google and type "site:yourdomain.com". It will list the pages from your site that are in Google's index.

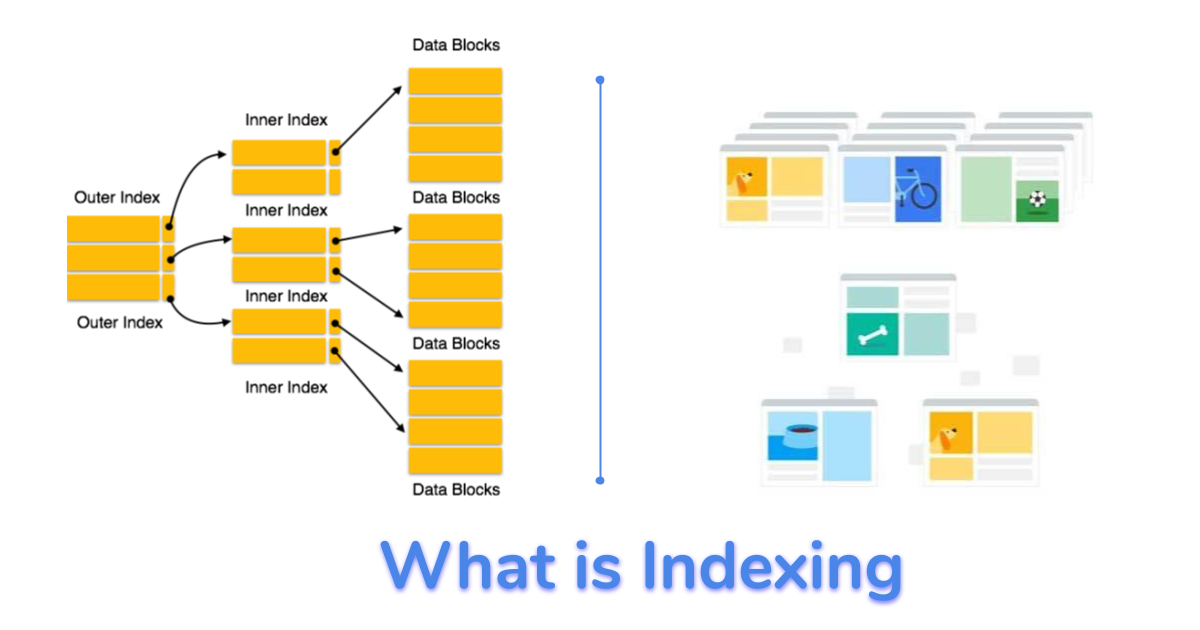

2. What is Indexing?

As I said earlier, indexing is the process of storing and organizing the content found during the crawling process.

Search engines process and store the information they find in an index, a huge database of all the content they’ve discovered and deem good enough to serve up to searchers.

If you think crawling alone is enough for your site to rank properly on Google, you are wrong.

After your site has been crawled, the next step is to make sure it can be indexed. The index is where your discovered pages are stored.

After a crawler finds a page, the search engine renders it just like a browser would.

In the process, the search engine analyzes the page's contents, and all of that information is stored in its index.
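A common way to organize an index is an inverted index, which maps each word to the pages that contain it, much like the index at the back of a book. The sketch below is a simplified illustration, not how Google actually stores its index: the pages and URLs are hypothetical, and a real index stores far more, such as word positions, links, and freshness and quality signals.

```python
import re
from collections import defaultdict

# Hypothetical crawled pages: URL -> page text.
PAGES = {
    "https://example.com/blog/post-1": "How search engines crawl the web",
    "https://example.com/blog/post-2": "How search engines index and rank pages",
    "https://example.com/about": "About this blog",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Build an inverted index: each word maps to the set of URLs containing it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def lookup(index: dict[str, set[str]], query: str) -> set[str]:
    """Return the URLs whose text contains every word in the query."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


index = build_index(PAGES)
print(sorted(lookup(index, "search engines")))
```

Because the index is built ahead of time, answering a query only means looking up a few words, instead of rereading every page on every search.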

How does content indexing work on the internet?

The indexing of web content is a complex and time-consuming process. It uses different methods from various scientific fields such as computer science and information science.

Leading search engines such as Google, Yahoo, and Bing rely on crawlers to index web content.

These are autonomous software programs that work similarly to robots, which is why they are often called search engine bots or robots.

Crawlers navigate through the internet and continuously add web content to the search engines' index. The content is sorted and divided into hierarchical levels using an algorithm.

This hierarchy is the main factor that determines the order in which search results are displayed when users enter a search query.

Google and other search engine providers are constantly working on improving their indexing algorithms.

Google, for example, updates important features of its index at irregular intervals.

By introducing the Caffeine indexing system, Google made a fundamental change to how content is indexed.

This update ensures that new content can be indexed and presented to users in a much faster way.

Web content that is updated regularly, such as podcasts or videos, can be found especially quickly.

The most important requirement for indexing is that your website can be accessed and analyzed by search engine crawlers.

One way to help crawlers is to include meta tags in the source code of your website.

Meta tags are usually placed in the head section of an HTML file and are primarily used to describe a website's content so that crawlers can better understand it.
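As a rough illustration of what a crawler sees, the sketch below uses Python's standard `html.parser` module to pull `name`/`content` pairs out of `<meta>` tags. The HTML page and its tag values are hypothetical, and real search engines use their own, far more robust parsers.

```python
from html.parser import HTMLParser

# Hypothetical page; the meta tag values are only an illustration.
HTML = """<html><head>
<title>How Search Engines Work</title>
<meta name="description" content="Crawling, indexing and ranking explained.">
<meta name="robots" content="index, follow">
</head><body>...</body></html>"""


class MetaTagReader(HTMLParser):
    """Collect <meta name="..." content="..."> pairs, roughly as a crawler might."""

    def __init__(self):
        super().__init__()
        self.meta = {}

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names for us.
        if tag == "meta":
            attributes = dict(attrs)
            name = attributes.get("name")
            if name is not None:
                self.meta[name.lower()] = attributes.get("content", "")


reader = MetaTagReader()
reader.feed(HTML)
print(reader.meta)
```

The `robots` entry is the meta-tag version of the crawler instructions mentioned earlier: `index, follow` allows the page to be indexed and its links followed, while `noindex` asks search engines to keep it out of the index.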

There are two types of search results on the internet: organic results and paid results.

We generally see ads on Google, where paid results appear at the top of the search results, followed by the organic results.

According to Google, more than 70% of users prefer organic results over paid results.

3. How do search engines rank your site or blog?

Ranking means content is ordered from most relevant to least relevant for the search you made.

How do search engines ensure that when someone types a query into the search bar, they get relevant results in return?

That process is known as ranking, or the ordering of search results by most relevant to least relevant to a particular query.

To determine relevance, search engines use algorithms: processes or formulas by which stored information is retrieved and ordered in meaningful ways.
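Google's actual ranking algorithms are not public, but a classic textbook relevance formula, TF-IDF, shows the basic idea. A page scores higher when the query words appear often in it (term frequency), and rare words count for more than common ones (inverse document frequency). This is a minimal sketch with hypothetical pages, not a description of how any real search engine ranks results.

```python
import math
import re
from collections import Counter

# Hypothetical pages: page name -> text.
PAGES = {
    "post-1": "search engines crawl the web and crawl links",
    "post-2": "search engines index pages",
    "post-3": "a recipe for pancakes",
}


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def rank(pages: dict[str, str], query: str) -> list[str]:
    """Order pages from most to least relevant using a simple TF-IDF score."""
    docs = {name: Counter(tokenize(text)) for name, text in pages.items()}
    n_docs = len(docs)
    scores = {}
    for name, counts in docs.items():
        total = sum(counts.values())
        score = 0.0
        for term in tokenize(query):
            df = sum(1 for c in docs.values() if term in c)  # pages containing the term
            if df == 0:
                continue
            tf = counts[term] / total     # how often the term appears in this page
            idf = math.log(n_docs / df)   # rarer terms count for more
            score += tf * idf
        scores[name] = score
    # Highest score first; pages with equal scores keep their original order.
    return sorted(scores, key=scores.get, reverse=True)


print(rank(PAGES, "search engines index"))
```

Real search engines combine hundreds of signals, such as links, freshness, location, and page quality, on top of simple text matching like this.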

These algorithms have gone through many changes over the years to improve the quality of search results.

Google, for example, makes algorithm adjustments every day.

Some of these updates are minor quality tweaks, whereas others are core/broad algorithm updates, or updates deployed to tackle a specific issue, such as Penguin, which targeted link spam.

We normally see two kinds of results: organic results, which most people prefer, and paid results, which appear above the organic results.

Let's understand how organic and paid results work.

Organic vs Paid Results

If you want your site to appear at the top of the search results, there are two ways: rich, high-quality content with good backlinks, or paid ads.

Ranking organically at the top of the search results is not easy. It takes time, energy, and patience.

Organic search results, also called natural results, are ranked entirely on merit, which is why many users see them as higher quality.

In simpler words, there’s no way to pay Google or other search engines to rank higher in the organic search results.

On the other hand, Paid search results are ads that appear on top of or underneath the organic results.

Paid results are ranked largely by the amount advertisers pay the search engine to place them at the top.

Paid ads are completely independent of the organic listings. Advertisers in the paid results section are “ranked” by how much they’re willing to pay for a single visitor from a particular set of search results (known as “Pay Per Click Advertising”).
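The pay-per-click idea can be sketched as simple arithmetic. This is a deliberately simplified model under stated assumptions: the advertisers and bids are hypothetical, ads are ordered by bid alone, and each advertiser pays its full bid per click. Real ad auctions such as Google Ads also weigh factors like ad quality, and the price actually charged per click is often lower than the bid.

```python
from dataclasses import dataclass


@dataclass
class Ad:
    advertiser: str
    bid_per_click: float  # most the advertiser will pay for one visitor


# Hypothetical advertisers bidding on the same search.
ads = [
    Ad("Shop A", 1.20),
    Ad("Shop B", 2.50),
    Ad("Shop C", 0.80),
]


def order_ads(ads: list[Ad]) -> list[Ad]:
    """Simplified model: the highest bid gets the top paid slot."""
    return sorted(ads, key=lambda ad: ad.bid_per_click, reverse=True)


def cost(ad: Ad, clicks: int) -> float:
    """Pay-per-click: the advertiser pays for each visitor, not for each view."""
    return round(ad.bid_per_click * clicks, 2)


ranked = order_ads(ads)
print([ad.advertiser for ad in ranked])
print(cost(ranked[0], 100))
```

In this model, 100 clicks on the top ad cost 100 × 2.50 = 250.00, no matter how many people merely saw the ad.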

Now you understand how paid and organic search results work, right?

There is one more factor that affects rankings on Google:

Localized search

Localized search results differ from place to place. You don't want to know KFC prices in Paris if you live in New York, right?

For example, when I search for "KFC" in New York and in Paris, the two searches give different results. That is why localized search matters.

When it comes to localized search results, the following 7 factors are commonly cited as influencing the ranking (Google's own help documentation groups local ranking under relevance, distance, and prominence):

- Relevance

- Distance

- Influence

- Reviews

- Keywords Bidding

- Organic ranking

- Local engagement
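Only the first two factors in the list, relevance and distance, are easy to illustrate in code. This is a minimal sketch under clear assumptions: the listings, their tags, and the user locations are hypothetical (the coordinates are approximate city centres), relevance is reduced to a simple tag match, and results are ordered by distance alone. Google's real local ranking combines many more signals and does not publish a formula.

```python
import math
from dataclasses import dataclass


@dataclass
class Place:
    name: str
    tags: tuple[str, ...]
    lat: float
    lon: float


# Hypothetical listings; coordinates are approximate city centres.
PLACES = [
    Place("Chicken shop (New York)", ("kfc", "chicken"), 40.7128, -74.0060),
    Place("Chicken shop (Paris)", ("kfc", "chicken"), 48.8566, 2.3522),
    Place("Bookshop (New York)", ("books",), 40.7130, -74.0050),
]


def distance_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, using the haversine formula."""
    r = 6371.0  # mean Earth radius in km
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def local_search(query: str, user_lat: float, user_lon: float) -> list[Place]:
    """Keep only relevant places (tag match), then put the nearest first."""
    relevant = [p for p in PLACES if query.lower() in p.tags]
    return sorted(relevant, key=lambda p: distance_km(user_lat, user_lon, p.lat, p.lon))


print([p.name for p in local_search("KFC", 40.75, -73.99)])  # user in New York
print([p.name for p in local_search("KFC", 48.86, 2.34)])    # user in Paris
```

The same query gives a different order depending on where the user is, which is exactly why the "KFC" search in New York and Paris returns different results.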

You and I don't need to master SEO or become SEO experts.

Trust me: Google changes its algorithms every day. Every day is a new day for Google.

For now, you should know how the search engine works.

Follow along with me.